Environmental risk assessment can only go as far as data availability allows. In this post, ecotoxicology student Larissa Herrmann shares her research project in the MAGIC working group at Uni Koblenz-Landau. She gained insight into programming in Python and R with the goal of making ecotoxicological data readily available for meta-analyses and risk assessments. The ultimate aim is a tool that can be applied to a broad spectrum of pesticide registration reports.

The research project course (RPC), a central part of the MSc Ecotoxicology program, offers an opportunity to discover the different working groups associated with environmental sciences and ecotoxicology at the University of Koblenz-Landau. During the RPC, students conduct an independent scientific project, which is a perfect way to prepare for the master’s thesis. Within this framework, the Ecosystem Resilience team, led by Ralf Schulz, offers projects that include the meta-analysis of environmental data and global pesticide exposure assessment.

I conducted my RPC at the MAGIC working group (“Meta-Analysis of the Global Impact of Chemicals”, Ecosystem Resilience team) in my third semester of studying Ecotoxicology. The methods of the project I chose were not the standard laboratory or literature research tasks that one would usually expect in this master’s program. Instead, I was asked to retrieve test results, or endpoints to be more precise, from ecotoxicological studies published by the European Food Safety Authority (EFSA) in the official documentation for pesticides registered in Europe. These endpoints, derived from laboratory and/or field studies, provide the basis for environmental risk assessment. However, to conduct a risk assessment based on these endpoints, they have to be accessible, for example through electronic databases, and such databases are not necessarily complete. Filling the gaps would therefore require extensive manual review of the documents, which is very cumbersome work.

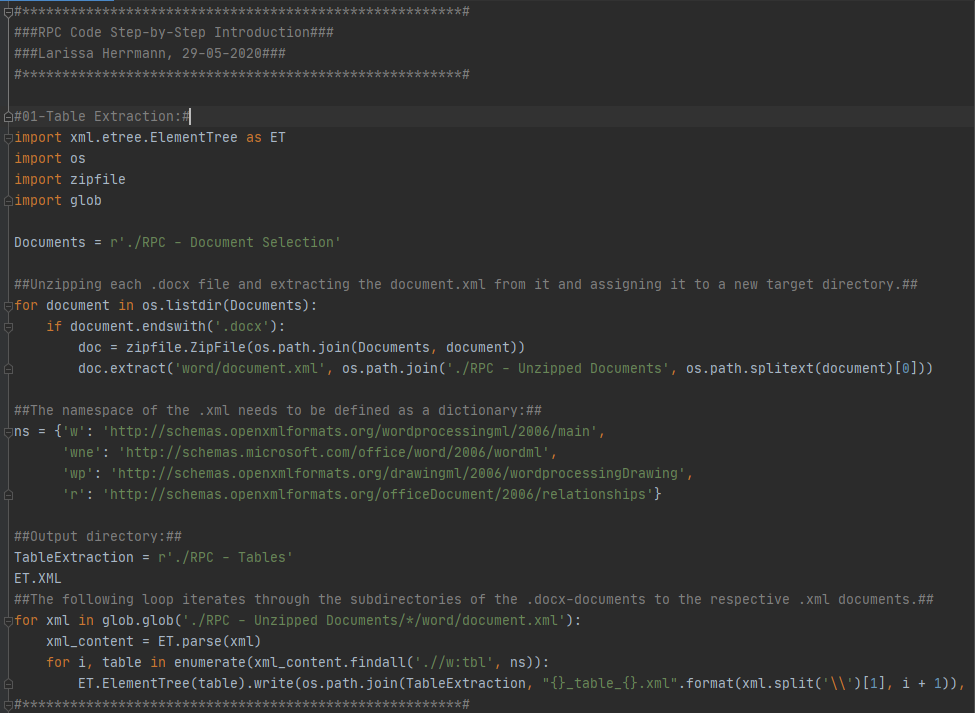

At this point, programming comes into play. Without any previous knowledge of the topic, my task was to attempt the development of an automated approach for identifying and extracting endpoint data. The programming language of choice was Python, an easy-to-learn, open-source language, which gave me the chance to learn something new in addition to R, which is used frequently throughout this master’s degree. After a decent amount of time spent studying the basics of Python programming, general text document structure and the specific construction of EFSA documents, I could attempt a first approach to identifying the endpoint data within these documents. Throughout the process, however, two obstacles became apparent: First, the presentation of the endpoints was inconsistent across documents over the years. Second, several problems occurred during the processing of the documents for automated data retrieval.

One part of the process that I would like to highlight, besides the new experiences that come with acquiring new skills, is the approach intended to identify the endpoint data within the EFSA documents. For this purpose, a proximity analysis based on Natural Language Processing (NLP) was conducted, in which individual words are assigned indices according to their occurrence frequency and are then analyzed over a set of documents to classify similar text parts. This approach was, however, only successful as long as the underlying text had been sufficiently processed, which is where the project held some pitfalls to be discovered during development. One of these was the conversion of the registration documents, which was required to bring them from the format they were published in into one that could be adequately processed.
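To illustrate the general idea of frequency-based word indices and similarity between text parts, here is a minimal sketch. It is not the code used in the project: it assumes simple word-count vectors compared by cosine similarity, and all function names and sample snippets are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(texts):
    """Assign each word an index by descending frequency over all texts."""
    counts = Counter(token for text in texts for token in tokenize(text))
    # Sort by count (descending), then alphabetically for a stable order.
    ranked = sorted(counts, key=lambda word: (-counts[word], word))
    return {word: index for index, word in enumerate(ranked)}


def vectorize(text, vocabulary):
    """Turn a text into a vector of word counts ordered by vocabulary index."""
    vector = [0] * len(vocabulary)
    for token in tokenize(text):
        if token in vocabulary:
            vector[vocabulary[token]] += 1
    return vector


def cosine_similarity(a, b):
    """Return the cosine of the angle between two count vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical snippets standing in for text parts of registration documents.
parts = [
    "Acute toxicity to fish: LC50 of 2.5 mg/L after 96 h",
    "Acute toxicity to Daphnia: EC50 of 1.1 mg/L after 48 h",
    "The applicant submitted the dossier to the rapporteur member state",
]

vocabulary = build_vocabulary(parts)
vectors = [vectorize(part, vocabulary) for part in parts]

sim_endpoints = cosine_similarity(vectors[0], vectors[1])
sim_unrelated = cosine_similarity(vectors[0], vectors[2])
print(f"endpoint vs. endpoint: {sim_endpoints:.2f}")
print(f"endpoint vs. other text: {sim_unrelated:.2f}")
```

In this toy example the two endpoint-like snippets score much higher against each other than against the administrative sentence. It also hints at why clean text conversion matters: if words are split or garbled during conversion, they no longer match across text parts and the similarity drops.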

Throughout the process and its challenges, my supervisors from MAGIC offered me a great deal of help and advice. This support enabled me to advance the development to a point where tables can be automatically identified and extracted from a given set of EFSA documents, which sets a starting point for their further analysis and the extraction of relevant endpoints. I am currently starting my master’s thesis, which follows up on my RPC research. The development so far will be refined in terms of the conversion accuracy of the documents; furthermore, the endpoints and their locations in the documents will be identified using a supervised-learning approach.

Finally, it remains to say that the task I was lucky enough to tackle was immensely interesting for me as a first-time programmer, offering plenty of challenges and opportunities to learn and improve. As an alternative to projects in laboratories, this kind of research introduces future ecotoxicologists and environmental scientists to the world of programming, a highly topical field.